논문 링크 : https://arxiv.org/abs/1511.06434

1. Introduction

In this paper, we make the following contributions

- We propose and evaluate a set of constraints on the architectural topology of Convolutional GANs that make them stable to train in most settings. We name this class of architectures Deep Convolutional GANs (DCGAN)

- We use the trained discriminators for image classification tasks, showing competitive performance with other unsupervised algorithms.

- We visualize the filters learned by GANs and empirically show that specific filters have learned to draw specific objects.

- We show that the generators have interesting vector arithmetic properties allowing for easy manipulation of many semantic qualities of generated samples.

3. Approach and Model Architecture

We also encountered difficulties attempting to scale GANs using CNN architectures commonly used in the supervised literature. However, after extensive model exploration we identified a family of architectures that resulted in stable training across a range of datasets and allowed for training higher resolution and deeper generative models.

- supervised literature에서 일반적으로 사용되는 CNN architectures를 사용하여 GAN을 확장하는데 어려움을 겪음. 그러나 광범위한 모델 탐색 후에 다양한 데이터 세트에 걸쳐 stable한 training을 제공하고 더 높은 해상도와 더 깊은 generative 모델을 training할 수 있는 architectures family를 식별했다.

Core to our approach is adopting and modifying three recently demonstrated changes to CNN architectures.

- 이 논문의 핵심은 최근에 정의된 CNN architectures의 세가지 변경점을 수정하고 채택하는 것이다.

- The first is the all convolutional net (Springenberg et al., 2014) which replaces deterministic spatial pooling functions (such as maxpooling) with strided convolutions, allowing the network to learn its own spatial downsampling. We use this approach in our generator, allowing it to learn its own spatial upsampling, and discriminator.

- 첫 번째는, maxpooling 같은 spatial pooling function stride convolution으로 대체하는 것이다. 이는 네트워크가 자체 공간 downsampling을 학습할 수 있도록 한다. 이를 생성기에 사용하여 자체 공간 upsampling과 discriminator를 학습할 수 있도록 한다.

2. Second is the trend towards eliminating fully connected layers on top of convolutional feature. The strongest example of this is global average pooling which has been utilized in state of the art image classification models (Mordvintsev et al.). We found global average pooling increased model stability but hurt convergence speed. A middle ground of directly connecting the highest convolutional features to the input and output respectively of the generator and discriminator worked well. The first layer of the GAN, which takes a uniform noise distribution Z as input, could be called fully connected as it is just a matrix multiplication, but the result is reshaped into a 4 dimensional tensor and used as the start of the convolution stack. For the discriminator, the last convolution layer is flattened and then fed into a single sigmoid output. See Fig. 1 for a visualization of an example model architecture.

- 두 번째는, convolutional feature 위의 FC layers를 제거하는 추세이다. 이것의 가장 강력한 예는 global average pooling이다. (https://gaussian37.github.io/dl-concept-global_average_pooling/) 이 논문의 저자는 global average pooling이 model stability를 높이지만 convergence speed를 안좋게 함을 발견했다. 가장 높은 convolutional features들을 generator와 discriminator의 입력과 출력에 직접적으로 연결하는 middle ground가 잘 작동했다. 균일한 noise distribution Z를 input으로 받는 GAN의 first layer는 단지 행렬곱이므로 FC라고 할 수 있지만 결과적으로 4-dimensional tensor로 재구성되고 convolution stack의 시작으로 사용된다. Discriminator는 마지막 convolution layer가 flatten되고 single sigmoid output으로 제공된다.

3. Third is Batch Normalization (Ioffe & Szegedy, 2015) which stabilizes learning by normalizing the input to each unit to have zero mean and unit variance. This helps deal with training problems that arise due to poor initialization and helps gradient flow in deeper models. This proved critical to get deep generators to begin learning, preventing the generator from collapsing all samples to a single point which is a common failure mode observed in GANs. Directly applying batchnorm to all layers however, resulted in sample oscillation and model instability. This was avoided by not applying batchnorm to the generator output layer and the discriminator input layer.

- 세 번째는 Batch Normalization인데, 이는 input 을 각 단위에 대한 입력을 평균이 0이고 unit variance가 되도록 normalizing 함으로서 learning을 stable하게 만드는 것이다. 이는 poor initialization 때문에 일어나는 training 문제를 잘 다루게 도와주고, gradient가 더 deep 한 model에서도 잘 흐르도록 도와준다. 이는 deep generators가 학습을 시작하도록 하여 generator가 모든 샘플을 GAN에서 관찰되는 일반적인 failure mode인 single point로 축소되는 것을 방지하는데에 중요한 것으로 판명 되었다. 반면에, 모든 layer에 batchnorm을 직접적으로 적용하는 것은, 결과적으로 샘플 oscillation(진동)과 instability를 초래하였다. 이는 generator output layer와 discriminator input layer에 batchnorm을 적용하지 않음으로써 피할 수 있게 되었다.

The ReLU activation (Nair & Hinton, 2010) is used in the generator with the exception of the output layer which uses the Tanh function. We observed that using a bounded activation allowed the model to learn more quickly to saturate and cover the color space of the training distribution. Within the discriminator we found the leaky rectified activation (Maas et al., 2013) (Xu et al., 2015) to work well, especially for higher resolution modeling. This is in contrast to the original GAN paper, which used the maxout activation (Goodfellow et al., 2013).

- ReLU는 generator에서 output layer(Tanh 사용)을 제외하고는 사용되었다. 우리는 bounded activation이 model이 training distribution의 color space를 더 빠르게 saturate하고 cover한다는 것을 관찰하였다. discriminator에서는 leaky ReLU가 더 잘 작동하고 특히 higher resolution modeling에서 더 그러하다는 것을 발견하였다. 이는 original GAN(maxout activation 사용)과는 대비되었다.

4. Details of Adversarial Training

No preprocessing was applied to training images besides scaling to the range of the tanh activation function [-1, 1]. All models were trained with mini-batch stochastic gradient descent (SGD) with a mini-batch size of 128. All weights were initialized from a zero-centered Normal distribution with standard deviation 0.02. In the LeakyReLU, the slope of the leak was set to 0.2 in all models. While previous GAN work has used momentum to accelerate training, we used the Adam optimizer(Kingma & Ba, 2014) with tuned hyperparameters. We found the suggested learning rate of 0.001, to be too high, using 0.0002 instead. Additionally, we found leaving the momentum term at the suggested value of 0.9 resulted in training oscillation and instability while reducing it to 0.5 helped stabilize training.

- Adversarial Training setting

- SGD(mini-batch size 128)

- All weights → initialization N(0, 0.02)

- LeakyReLU, slope of leak : 0.2

- Adam optimizer(with tuned hyperparameters)

- learning rate 0.0002

- momemtum term 0.5 helped stabilize training

4.1 LSUN

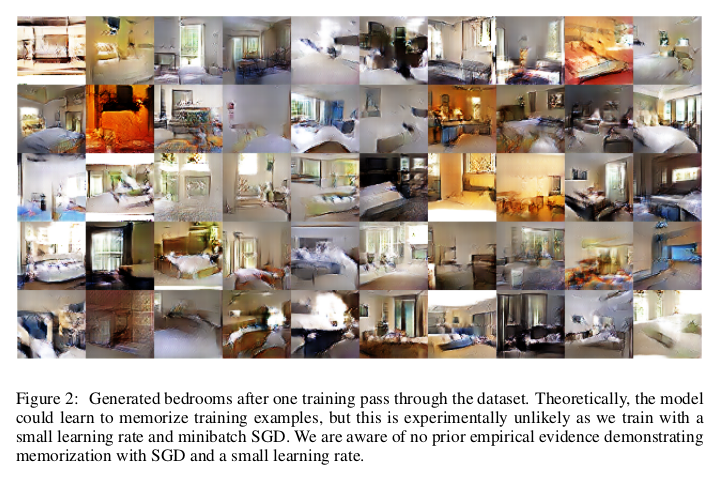

As visual quality of samples from generative image models has improved, concerns of over-fitting and memorization of training samples have risen. To demonstrate how our model scales with more data and higher resolution generation, we train a model on the LSUN bedrooms dataset containing a little over 3 million training examples. Recent analysis has shown that there is a direct link between how fast models learn and their generalization performance (Hardt et al., 2015). We show samples from one epoch of training (Fig.2), mimicking online learning, in addition to samples after convergence (Fig.3), as an opportunity to demonstrate that our model is not producing high quality samples via simply overfitting/memorizing training examples. No data augmentation was applied to the images.

- LSUN dataset 설명

4.1.1 Deduplication(중복 제거)

To further decrease the likelihood of the generator memorizing input examples (Fig.2) we perform a simple image de-duplication process. We fit a 3072-128-3072 de-noising dropout regularized RELU autoencoder on 32x32 downsampled center-crops of training examples. The resulting code layer activations are then binarized via thresholding the ReLU activation which has been shown to be an effective information preserving technique (Srivastava et al., 2014) and provides a convenient form of semantic-hashing, allowing for linear time de-duplication . Visual inspection of hash collisions showed high precision with an estimated false positive rate of less than 1 in 100. Additionally, the technique detected and removed approximately 275,000 near duplicates, suggesting a high recall.

- Generator가 input examples를 기억할 가능성을 더 줄이기 위해 간단한 이미지 중복 제거 프로세스를 수행한다. resulting code layer activation은 효과적인 정보 보존 기술인 것으로 나타난 ReLU 활성화 임계값을 통해 이진화되고 편리한 형태의 의미론적 해싱을 제공하여 linear time de-duplication을 한다. 해시 충돌에 대한 육안 검사는 1/100 미만의 추정 오탐율로 높은 정밀도를 보여주었다. 또한 이 기술은 중복 근처에서 약 275,000개를 감지하고 제거하여 높은 재현율을 나타낸다.

4.2 Faces

We scraped images containing human faces from random web image queries of peoples names. The people names were acquired from dbpedia, with a criterion that they were born in the modern era. This dataset has 3M images from 10K people. We run an OpenCV face detector on these images, keeping the detections that are sufficiently high resolution, which gives us approximately 350,000 face boxes. We use these face boxes for training. No data augmentation was applied to the images.

- dataset 설명

4.3 Imagenet-1K

We use Imagenet-1k (Deng et al., 2009) as a source of natural images for unsupervised training. We train on 32 × 32 min-resized center crops. No data augmentation was applied to the images.

5. Empirical Validation of DCGANs Capabilities

5.1 Classifying CIFAR-10 using GANs as a feature extractor

One common technique for evaluating the quality of unsupervised representation learning algorithms is to apply them as a feature extractor on supervised datasets and evaluate the performance of linear models fitted on top of these features.

On the CIFAR-10 dataset, a very strong baseline performance has been demonstrated from a well tuned single layer feature extraction pipeline utilizing K-means as a feature learning algorithm.

- feature extractor 예시

- 5.1에서는 CIFAR-10 datasets을 사용하기 위해 어떤 방법을 사용하였는지랑 성능에 대해 표기하였는데, 기존에 있던 모델보다 좋지는 않다. 추가적인 향상은 finetuning을 통하여 가능한데, 이는 future work로 남겨두겠다. 여기서 DCGAN은 CIFAR-10에서 훈련되지 않았기 때문에 이 실험은 학습된 기능의 domain robustness를 정의한다.

5.2 Classifying SVHN Digits using GANs as a feature extractor

Following similar dataset preparation rules as in the CIFAR-10 experiments, we split off a validation set of 10,000 examples from the non-extra set and use it for all hyperparameter and model selection.

6. Investigating and Visualizing the Internals of the Networks

6.1 Walking in the Latent Space

6.2 Visualizing the Discriminator Features

6.3 Manipulating the Generator Representation

6.3.1 Forgetting to Draw Certain Objects

In addition to the representations learnt by a discriminator, there is the question of what representations the generator learns. logistic regression was fit to predict whether a feature activation was on a window (or not), by using the criterion that activations inside the drawn bounding boxes are positives and random samples from the same images are negatives.

- 특정 object에 대한 filters를 drop-out 시키면 그 object 대신에 비슷한 object들이 생성됨.

6.3.2 Vector Arithmetic on Face Samples

7 Conclusion and Future Work

We propose a more stable set of architectures for training generative adversarial networks and we give evidence that adversarial networks learn good representations of images for supervised learning and generative modeling. There are still some forms of model instability remaining - we noticed as models are trained longer they sometimes collapse a subset of filters to a single oscillating mode.

- 학부생 때 만든 것이라 오류가 있거나, 설명이 부족할 수 있습니다.

Uploaded by N2T

'Paper Summary' 카테고리의 다른 글

| Deep Marching Cubes: Learning Explicit Surface Representations, CVPR’18 (0) | 2023.03.26 |

|---|---|

| Generative Adversarial Networks, NIPS’14 (0) | 2023.03.26 |

| Graph Attention Networks, ICLR’18 (0) | 2023.03.26 |

| GaAN: Gated Attention Networks for Learning on Large and Spatiotemporal Graphs, UAI’18 (0) | 2023.03.26 |

| Learning Actor Relation Graphs for Group Activity Recognition, CVPR’19 (0) | 2023.03.26 |